When the Berlin Wall came down, the United States was the world's principal exporter of democracy. Not always consistently and not always with positive results, but no other country came close. For most of the time since, technological innovation (much of which took place in America) has been a liberalizing force. But today, the US has become the principal exporter of tools that undermine democracy—not intentionally, but nonetheless as a direct consequence of the business models driving growth. Resulting technological advances in artificial intelligence (AI) will erode social trust, empower demagogues and authoritarians, and disrupt businesses and markets.

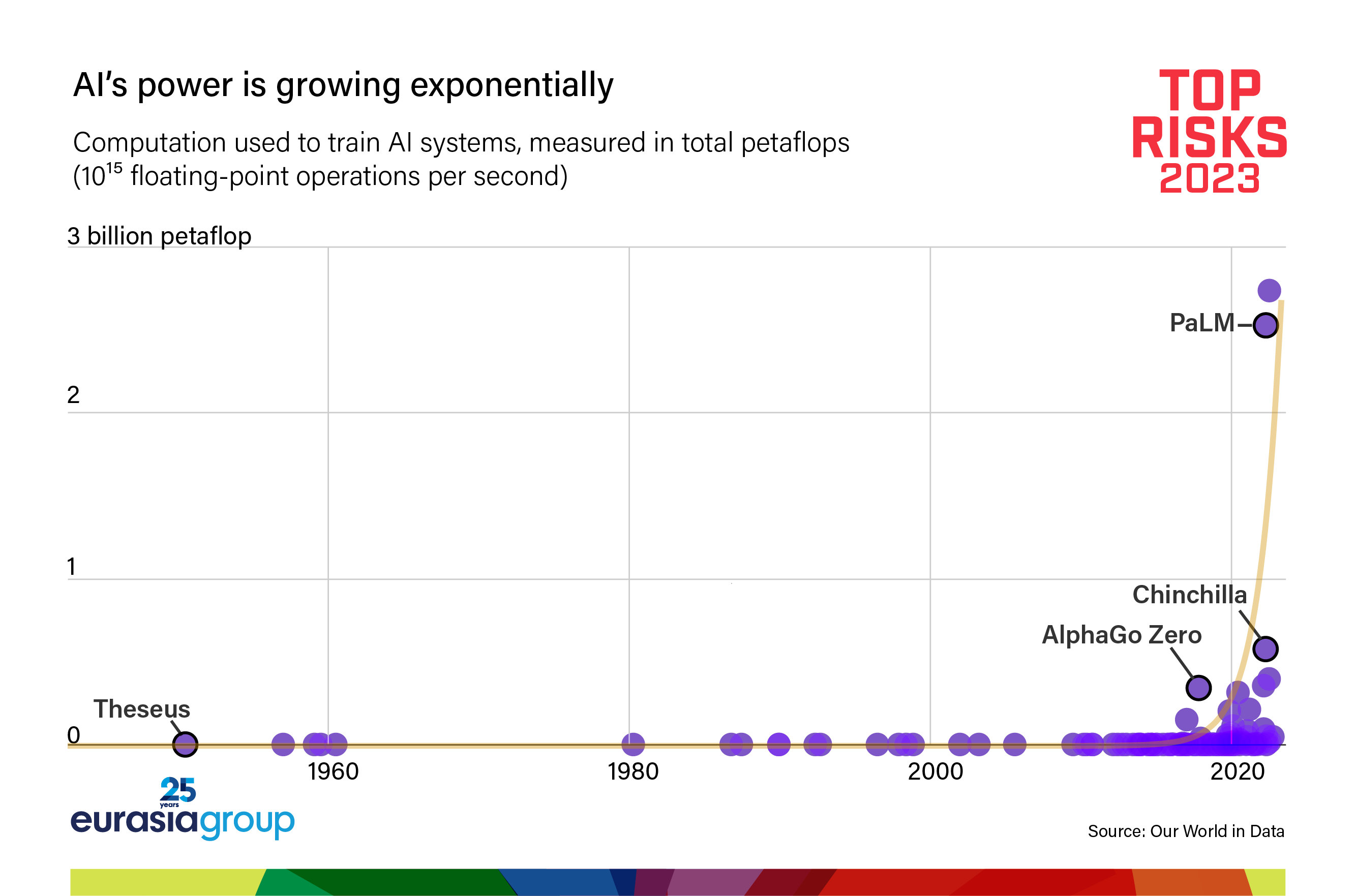

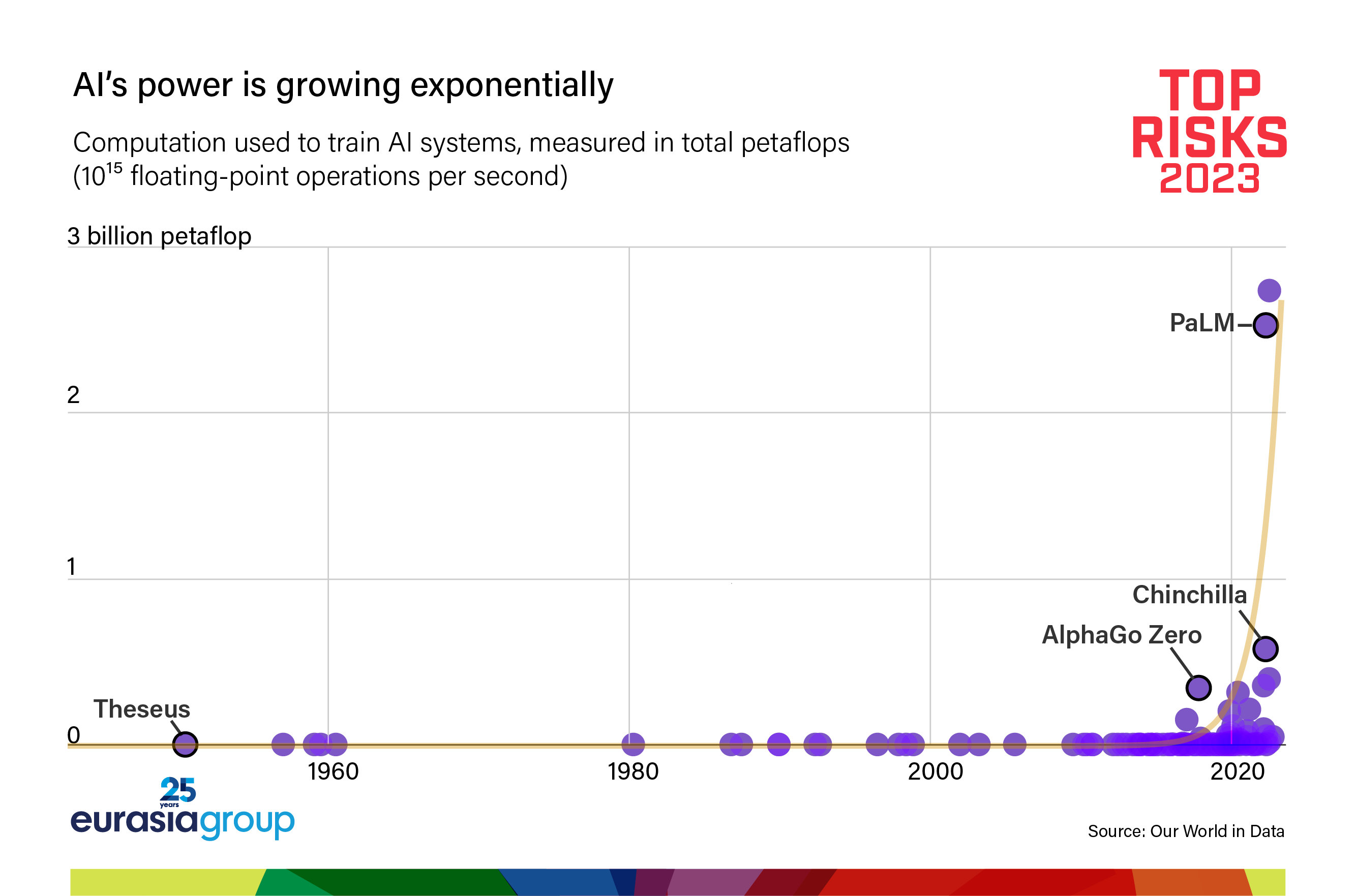

This year will be a tipping point for disruptive technology's role in society. A new form of AI, known as generative AI, will allow users to create realistic images, videos, and text with just a few sentences of guidance. Large language models like GPT-3 and the soon-to-be-released GPT-4 will be able to reliably pass the Turing test—a Rubicon for machines' ability to imitate human intelligence. And advances in deepfakes, facial recognition, and voice synthesis software will render control over one's likeness a relic of the past. User-friendly applications such as ChatGPT and Stable Diffusion will allow anyone minimally tech-savvy to harness the power of AI (indeed, the title of this risk was generated by the former in under five seconds).

These advances represent a step-change in AI's potential to manipulate people and sow political chaos. When barriers to entry for creating content no longer exist, the volume of content rises exponentially, making it impossible for most citizens to reliably distinguish fact from fiction. Disinformation will flourish, and trust—the already-tenuous basis of social cohesion, commerce, and democracy—will erode further. Disinformation will remain the core currency of social media platforms, which—by virtue of their private ownership, lack of regulation, and engagement-maximizing business model—are the ideal breeding ground for AI's disruptive effects to go viral.

These breakthroughs will have far-reaching political and economic effects.

Demagogues and populists will weaponize AI for narrow political gain at the expense of democracy and civil society. We've already seen the likes of Trump, Brazil's Jair Bolsonaro, and Hungary's Viktor Orbán leverage the power of social media and disinformation to manipulate electorates and win elections, but technological advances will create structural advantages for every political leader to deploy these tools—no matter where they sit on the political spectrum. Political actors will use AI breakthroughs to create low-cost armies of human-like bots tasked with elevating fringe candidates, peddling conspiracy theories and “fake news,” stoking polarization, and exacerbating extremism and even violence—all of it amplified by social media's echo chambers. We will no doubt see this trend play out this year in the early stages of the US primary season (please see risk #8) as well as in general elections in Spain and Pakistan.

These tools will also be a gift to autocrats bent on undermining democracy abroad and stifling dissent at home. Here, Russia and China lead the way. Building on its subversion of the 2016 US election, as well as disinformation campaigns in Ukraine and Eastern Europe, Moscow will step up its rogue behavior (please see risk #1) with newly empowered influence operations targeting NATO countries. The 2023 Polish parliamentary elections are the most obvious target, but others will be vulnerable, too. Meanwhile, Beijing—which already uses sensors, mobile tech, and facial recognition to track its citizens' movements, activities, and communications—will deploy new technologies not only to tighten surveillance and control of its own society, but also to spread propaganda on social media and intimidate Chinese-language communities overseas, including in Western democracies.

The proliferation of AI will have profound implications beyond politics, too. Companies in every sector will contend with new reputational risks when key executives or accounts are impersonated with malicious intent, triggering public relations scandals and even stock selloffs. Generative AI will make it difficult for businesses and investors to distinguish between genuine engagement and sentiment on the one hand, and sabotage attempts by hackers, activist investors, or corporate rivals on the other, with material implications for their bottom lines. Citizen activists, trolls, and anyone in between will be able to cause corporate crises by generating large enough volumes of high-quality tweets, product reviews, online comments, and letters to executives to simulate mass movements in public opinion. AI-generated content amplified by social media will overwhelm high-frequency trading and sentiment-driven investment strategies, with market-moving effects.

Of course, AI also offers incredible productivity gains that we've only just begun to appreciate (if this weren't our Top Risks report, we'd be writing more about them). But that's the thing with revolutionary technologies, from the printing press to nuclear fission and the internet—their power to drive human progress is matched by their ability to amplify humanity's most destructive tendencies. The former is embraced and promoted; the latter downplayed and usually ignored … until there's a crisis.

The irony is that America's fertile ground for innovation—nurtured by its representative democracy, free markets, and open society—has allowed these technologies to develop and spread without guardrails, to the point that they now threaten the very political systems that made them possible.